TinyStories

Paper Notes

April 2025

Abstract


On 'TinyStories: How Small Can Language Models Be and Still Speak Coherent English?' by Eldan and Li

Language models (LMs) often struggle to produce coherent and fluent text when they are small: models with around 125M parameters or fewer (e.g., GPT-Neo small or GPT-2 small) rarely generate coherent, consistent English beyond a few words, even after extensive training.

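Likely due to the breadth and diversity of the training data rather than model size alone: small models cannot absorb the full range of vocabulary, facts and styles in large general-purpose corpora.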

The authors present TinyStories, a synthetic dataset of short stories that only use words a typical 3- to 4-year-old would understand.

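TinyStories can be used to train and evaluate LMs that are much smaller than state-of-the-art models (below 10 million total parameters) or that have much simpler architectures (a single transformer block), yet still produce fluent and consistent multi-paragraph stories.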

Introduction

Given a prompt:

Tom and Jane are friends. One day, Jane goes to Tom’s house. Tom has a big pot of soup. He wants to share it with Jane. “Jane, do you want some soup?” Tom asks. “Yes, please. It looks yummy,” Jane says. Tom pours some soup into two bowls. He gives one bowl to Jane. Jane takes a spoonful of soup, but then she makes a face. The soup is

A 28M-parameter model trained on TinyStories completes it with:

very bitter. She does not like it. She says, “I don’t like this soup. It is too bitter.”

Tom is sorry. He says, “I’m sorry, Jane. I didn’t know you don’t like bitter soup. I will make you something else.” He looks around the kitchen and finds some bread and cheese. He puts them on the table and says, “Here, Jane. You can have some bread and cheese. They are not bitter. They are sweet and yummy.”

Jane is happy. She says, “Thank you, Tom. You are a good friend. I like bread and cheese. They are not bitter.”

versus GPT2-XL (1.5B parameters):

too old. Tom scowls.

“Don’t be that way! Your soup has to be fresh like your daughter,” Tom says, angry and disappointed.

“Bring me some more,” Jane cries. “No! Go away, I like my soup old and fresh!”

Description of Dataset

The stories are generated with GPT-3.5 and GPT-4 from a set of instructions and a restricted vocabulary.

The vocabulary consists of about 1500 basic words that try to mimic the vocabulary of a typical 3- to 4-year-old child, split into nouns, verbs and adjectives.

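In each generation, 3 words are chosen at random (one verb, one noun, and one adjective), and the model is instructed to write a story that somehow combines them. A random subset of story features (e.g., a dialogue, a bad ending) is also requested, which adds further diversity.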
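The sampling step above can be sketched as follows. This is a minimal illustration, not the authors' code: the word lists are tiny hypothetical stand-ins for the real ~1500-word vocabulary, the feature list only contains the two features from the example prompt in these notes, and `build_prompt` is a hypothetical helper whose template mirrors that example prompt.

```python
import random

# Hypothetical toy word lists; the real vocabulary (~1500 words split into
# nouns, verbs and adjectives) is not reproduced here.
VERBS = ["decorate", "jump", "share"]
NOUNS = ["thunder", "soup", "butterfly"]
ADJECTIVES = ["ancient", "bitter", "beautiful"]

# Only the two features that appear in the example prompt; the full feature
# list used for the dataset is not reproduced here.
FEATURES = [
    "the story should contain at least one dialogue",
    "the story has a bad ending",
]


def build_prompt(rng: random.Random) -> str:
    """Sample one verb, one noun, one adjective and a random subset of features."""
    verb = rng.choice(VERBS)
    noun = rng.choice(NOUNS)
    adjective = rng.choice(ADJECTIVES)
    # Assumption: request at least one feature per story.
    k = rng.randint(1, len(FEATURES))
    features = rng.sample(FEATURES, k)
    return (
        "Write a short story (3-5 paragraphs) which only uses very simple words "
        "that a 3 year old child would likely understand. "
        f'The story should use the verb "{verb}", the noun "{noun}" '
        f'and the adjective "{adjective}". '
        "The story should have the following features: "
        + ", ".join(features)
        + ". Remember to only use simple words!"
    )


if __name__ == "__main__":
    # A fixed seed makes the sampled prompt reproducible.
    print(build_prompt(random.Random(0)))
```

Sampling the words and features independently per story is what makes the generated stories diverse even though the vocabulary is small.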

For example, given the prompt:

Write a short story (3-5 paragraphs) which only uses very simple words that a 3 year old child would likely understand. The story should use the verb “decorate”, the noun “thunder” and the adjective “ancient”. The story should have the following features: the story should contain at least one dialogue, the story has a bad ending.

Remember to only use simple words!

GPT-3.5/4 generates:

Once upon a time, in an ancient house, there lived a girl named Lily. She loved to decorate her room with pretty things. One day, she found a big box in the attic. She opened it and saw many shiny decorations. Lily was very happy and decided to use them in her room.

As Lily was decorating her room, the sky outside became dark. There was a loud thunder sound, and Lily got scared. She ran to her mom and said, “Mommy, the thunder is so loud!” Her mom hugged her and said, “Don’t worry, it will pass soon.”

But the thunder did not stop. It got louder and louder, and the ancient house started to shake. Suddenly, the roof fell down on the pretty decorations. Lily was sad because her room was not pretty anymore. The end.

TinyStories-Instruct

There is a variant of the dataset that is used to instruction-tune a language model.

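Instructions are of 4 types: a list of words that must appear in the story (Words), a sentence that must appear somewhere in the story (Sentence), a list of story features such as dialogue or a plot twist (Features), and a short summary of the story (Summary).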

A sample looks like this:

Summary: Lily and Timmy build a sandcastle together and learn to compromise, but it gets knocked over by a gust of wind. They find beauty in the broken sandcastle and play happily with a butterfly.

Features: Dialogue, Foreshadowing, Twist

Sentence: One day, she went to the park and saw a beautiful butterfly.

Words: disagree, network, beautiful

Story: Once upon a time, there was a little girl named Lily. She loved to play with her toys and her friends. One day, she went to the park and saw a beautiful butterfly. She tried to catch it, but it flew away. Lily’s friend, Timmy, came to play with her. They decided to build a sandcastle, but they disagreed on how to build it. Lily wanted to make it tall, while Timmy wanted to make it wide. They argued for a while, but finally agreed to make it both tall and wide. As they were building the sandcastle, they noticed a group of ants working together. They realized that the ants were building a network of tunnels. Suddenly, a gust of wind came and knocked over their sandcastle. They were sad, but then they saw the butterfly again. It landed on the remains of their sandcastle and they realized that it was still beautiful, even in its broken state. They smiled and played together happily.
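A minimal sketch of how a sample in this format could be assembled from its fields, assuming the field order of the sample above (Summary, Features, Sentence, Words, then Story) and that any instruction may be omitted. The function name `format_instruct_sample` is hypothetical, and the paper may order or subsample the instructions differently.

```python
from typing import List, Optional, Sequence


def format_instruct_sample(
    story: str,
    summary: Optional[str] = None,
    features: Optional[Sequence[str]] = None,
    sentence: Optional[str] = None,
    words: Optional[Sequence[str]] = None,
) -> str:
    """Render a TinyStories-Instruct style example as plain text.

    Only the instructions that are provided are emitted, in the order
    Summary, Features, Sentence, Words (an assumption based on one sample);
    the story itself always comes last.
    """
    lines: List[str] = []
    if summary is not None:
        lines.append(f"Summary: {summary}")
    if features:
        lines.append("Features: " + ", ".join(features))
    if sentence is not None:
        lines.append(f"Sentence: {sentence}")
    if words:
        lines.append("Words: " + ", ".join(words))
    lines.append(f"Story: {story}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(format_instruct_sample(
        "Once upon a time...",
        features=["Dialogue", "Foreshadowing", "Twist"],
        words=["disagree", "network", "beautiful"],
    ))
```

Putting the instructions before the story means a model trained on this text learns to condition the story on whatever instructions precede it; at inference time one would supply the instruction lines plus "Story:" and let the model continue.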

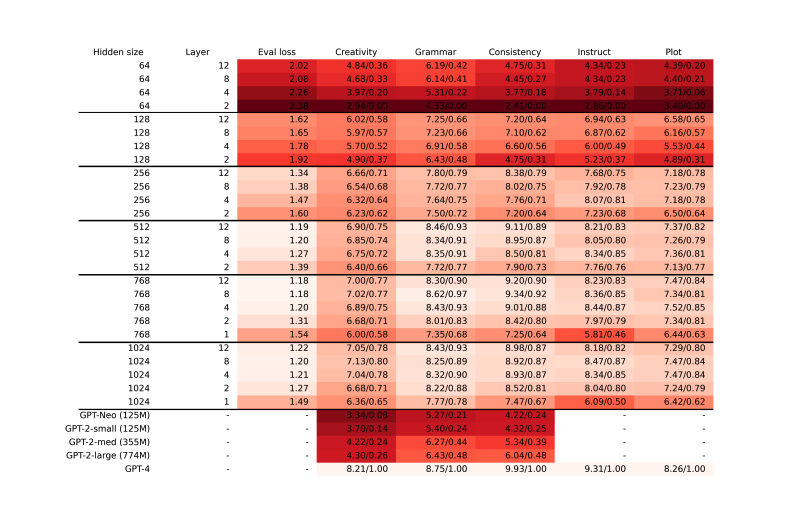

Performance of SLMs on TinyStories

The small language models (SLMs) trained on TinyStories range from about 1M to 35M parameters and from 1 to 8 layers, and can be trained on a single V100 GPU within 30 hours.

On a single H100 this would probably take roughly 6 to 10 hours (own estimate, not from the paper; it implies a 3x to 5x speedup over the V100).

Assuming hidden size is equivalent to … and that "layer" refers to the number of transformer blocks.
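To see how hidden size and the number of transformer blocks drive the 1M to 35M parameter range, here is a rough parameter-count sketch for a GPT-style decoder. It uses the common 12·d² per-block approximation and is not taken from the paper; the 4x MLP expansion, tied embeddings, ignored biases/layer norms/positional embeddings, and the example vocabulary size of 10,000 are all assumptions.

```python
def approx_param_count(hidden_size: int, n_layers: int, vocab_size: int) -> int:
    """Rough parameter count for a GPT-style decoder-only transformer.

    Assumptions (not from the paper): 4x MLP expansion, tied input/output
    embeddings, and biases, layer norms and positional embeddings ignored.
    """
    # Token embedding matrix: vocab_size x hidden_size.
    embedding = vocab_size * hidden_size
    # Per block: Q, K, V and output projections (4 * d^2)
    # plus MLP up/down projections (2 * d * 4d = 8 * d^2).
    per_block = 12 * hidden_size ** 2
    return embedding + n_layers * per_block


if __name__ == "__main__":
    # Hypothetical configurations; vocab_size=10_000 is an assumption.
    for d, n in [(64, 1), (64, 8), (256, 8), (768, 1)]:
        print(f"hidden={d:4d} layers={n} ~{approx_param_count(d, n, 10_000):,} params")
```

At small hidden sizes the embedding matrix can account for a large share of the total (with d = 64 and a 10,000-token vocabulary it is larger than all 8 blocks combined), while block parameters grow quadratically with hidden size and only linearly with depth.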